Strategic Chaos: Deconstructing Cognitive Warfare and the Blurring Lines of Influence

The digital age has ushered in an era where information is both a weapon and a shield. As a former intelligence analyst specializing in psychological operations, I’ve witnessed firsthand the evolution of influence tactics. Now, consulting on disinformation campaigns, I'm increasingly concerned about the subtle, yet profound, shift toward what is being termed "cognitive warfare." It’s a battle fought not on physical terrain, but within the minds of individuals and populations. The line between legitimate defense and manipulative overreach is becoming dangerously blurred.

Recent leaks, supposedly from a FOIA request for DARPA's (fictional) "Strategic Chaos" program in 2019, offer a chilling glimpse into potential strategies. While the documents are likely heavily redacted (and perhaps entirely fabricated), the language they employ warrants careful scrutiny. Let’s dissect the "Executive Summary," "Phase 1: Target Audience Analysis," and "Phase 2: Narrative Intervention Strategies" sections, comparing seemingly benign terms with established concepts in behavioral psychology, neuroscience, and information warfare.

This image depicts a brain fractured by digital screens showing social media, news headlines, and propaganda, symbolizing cognitive overload and manipulation. Government insignias subtly embedded hint at unseen forces.

Executive Summary: "Optimized Information Environments," "Algorithmic Amplification of Pro-Social Narratives," "Preemptive Cognitive Countermeasures"

The language here is carefully crafted. "Optimized Information Environments" sounds like a noble pursuit, a quest for clarity and truth. But what does "optimized" really mean? In the context of information warfare, it suggests a curated reality designed to elicit specific responses. It echoes the concept of "choice architecture" described by Richard Thaler and Cass Sunstein in Nudge: Improving Decisions About Health, Wealth, and Happiness (Thaler & Sunstein, 2008). Nudging means subtly steering people's choices without coercion and without removing any options. When it is applied at a societal level and orchestrated by state actors, however, the potential for manipulation becomes immense. It's one thing to arrange a cafeteria to encourage healthier eating; it's quite another to shape the entire information landscape to push a specific political agenda.

"Algorithmic Amplification of Pro-Social Narratives" is equally concerning. Algorithms, the silent arbiters of our digital experience, can be weaponized to elevate certain viewpoints while suppressing others. Social media platforms already grapple with accusations of bias in their algorithms. Imagine a scenario where government agencies actively manipulate these algorithms (or create their own) to promote narratives deemed "pro-social," subtly shaping public discourse and marginalizing dissenting voices. This could be achieved through shadowbanning, demotion of opposing content, or the amplification of strategically chosen "influencers."

"Preemptive Cognitive Countermeasures" sounds like a defensive strategy against disinformation. However, the term "preemptive" raises red flags. Are these countermeasures deployed before a threat is even identified? What constitutes a cognitive threat? Is it simply disagreement with the government's preferred narrative? Such measures could easily be used to stifle legitimate criticism and dissent, framing it as a foreign influence operation or a threat to national security.

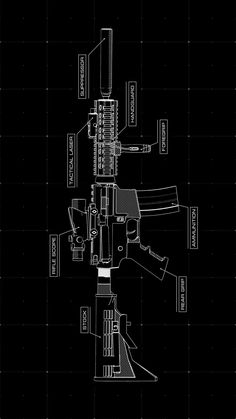

This detailed view highlights the unsettling combination of modern and historical propaganda techniques, representing the complex challenges of cognitive manipulation.

Phase 1: Target Audience Analysis – "Sentiment Mapping & Predictive Modeling," "Identification of Cognitive Vulnerabilities," "Psychographic Segmentation for Persuasion Efficacy"

This is where the rubber meets the road. "Sentiment Mapping & Predictive Modeling" leverages the vast amounts of data collected on individuals to understand their emotions, beliefs, and behaviors. While market research uses similar techniques, the scale and scope of government surveillance amplify the potential for abuse. Imagine using AI-powered sentiment analysis to identify populations susceptible to certain narratives, then tailoring disinformation campaigns to exploit those vulnerabilities.

"Identification of Cognitive Vulnerabilities" is particularly disturbing. The field of behavioral economics, popularized by Daniel Kahneman in Thinking, Fast and Slow (Kahneman, 2011), has identified numerous cognitive biases that affect our decision-making. Biases such as confirmation bias (favoring information that confirms existing beliefs) and the availability heuristic (judging how likely something is by how easily examples come to mind) can be exploited to manipulate individuals. Mapping these vulnerabilities across a population allows propaganda and disinformation to be targeted with precision.

"Psychographic Segmentation for Persuasion Efficacy" takes this a step further. It involves dividing the population into groups based on their psychological characteristics, values, and lifestyles. This allows for the creation of highly personalized messaging that resonates with specific segments, maximizing the effectiveness of persuasion attempts. This isn't just about selling products; it's about selling ideas, ideologies, and political agendas.

This image visualizes the intrusive nature of cognitive warfare and the fragility of the human mind when subjected to relentless manipulation.

Phase 2: Narrative Intervention Strategies – "Precision-Targeted Messaging," "Counter-Narrative Inoculation," "Strategic Disruption of Misinformation Pathways"

"Precision-Targeted Messaging" is the culmination of the previous phases. It involves delivering customized propaganda and disinformation to individuals and groups based on their identified vulnerabilities and psychographic profiles. Because each segment receives only the messages tailored to it, the result is a set of echo chambers in which individuals are exposed only to information that confirms their existing beliefs, further polarizing society.

"Counter-Narrative Inoculation" sounds like a benevolent attempt to protect people from disinformation. The term borrows from inoculation theory, which holds that exposing individuals to weakened versions of opposing arguments can make them more resistant to those arguments in the future. In the context of cognitive warfare, however, the same mechanism could be turned around: deliberately circulating distorted, easily refuted versions of opposing viewpoints so that audiences dismiss the genuine arguments when they later encounter them. It's a dangerous game of playing with fire.

"Strategic Disruption of Misinformation Pathways" raises questions about censorship and control of information. Who decides what constitutes "misinformation"? How are these pathways disrupted? Are we talking about shutting down websites, censoring social media posts, or even targeting individuals deemed to be spreaders of misinformation? The potential for abuse is significant.

This image subtly reveals the hidden influence of government agencies and military units in shaping public opinion and controlling information flows.

Legal and Ethical Implications

If the "Strategic Chaos" program, or something like it, were proven to be operational, the legal and ethical implications would be profound. Does such a program violate free speech protections under the First Amendment? As Eugene Volokh, a renowned First Amendment scholar, has argued, the government has broad authority to communicate its views. However, there is a critical difference between persuasion and manipulation. If the government is actively deceiving or coercing citizens, it may be violating their constitutional rights.

Moreover, the use of psychological manipulation raises serious ethical concerns. Is it ever justifiable for the government to deliberately exploit cognitive vulnerabilities to influence public opinion? Where do we draw the line between legitimate defense measures and unlawful psychological manipulation? The history of psychological warfare, including controversies surrounding Operation Mockingbird (which allegedly involved the CIA's use of journalists for propaganda purposes), serves as a stark reminder of the potential for abuse.

This visual reinforces the theme of cognitive dissonance and the fragmentation of truth in the digital age, underscoring the dangers of strategic chaos.

Conclusion

The leaked (fictional) "Strategic Chaos" proposals offer a disturbing glimpse into the potential weaponization of behavioral psychology and information warfare. While the language may seem benign, the underlying concepts – optimized information environments, algorithmic amplification, preemptive countermeasures, precision-targeted messaging – raise serious concerns about government overreach and the manipulation of public opinion. As citizens, we must be vigilant in protecting our cognitive freedom and demanding transparency from our government. We must also cultivate critical thinking skills and resist the seductive allure of echo chambers and disinformation. The battle for our minds is already underway; it’s time to arm ourselves with knowledge and discernment.

References:

- Kahneman, D. (2011). Thinking, fast and slow. Farrar, Straus and Giroux.

- Thaler, R. H., & Sunstein, C. R. (2008). Nudge: Improving decisions about health, wealth, and happiness. Yale University Press.

- Volokh, E. (2003). The mechanisms of the slippery slope. Harvard Law Review, 116(4), 1026-1137.

This image creates a visceral and unsettling viewing experience, reinforcing the theme of strategic chaos and the fragility of truth.
