The Algorithmic Panopticon: Unmasking Bias in China’s Social Credit System

Image: Dystopian visualization of the Chinese Social Credit System, featuring faces overlaid with glitching facial recognition and oppressive surveillance elements.

I used to believe in the promise of AI. I was part of the wave, a consultant helping tech companies refine their facial recognition algorithms, chasing ever-higher accuracy scores. I saw the potential for good, such as improved security, streamlined processes and even better healthcare. But I also saw something else: the inherent capacity for misuse, for reinforcing existing inequalities, and for creating entirely new forms of oppression. The works of Shoshana Zuboff ("The Age of Surveillance Capitalism"), Joy Buolamwini and Timnit Gebru ("Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification"), and Cathy O'Neil ("Weapons of Math Destruction") became my haunting companions, forcing me to confront the darker side of my work. Now I want to talk about China's Social Credit System and the unsettling vulnerabilities lurking within its facial recognition infrastructure, vulnerabilities I fear are being deliberately exploited.

The All-Seeing Eye: A Brief Overview of the Social Credit System

China's Social Credit System (SCS) is a vast, nationwide initiative aimed at quantifying and shaping citizen behavior. Its implementation is fragmented and varies by region, and there is no single national score, but the core principle is consistent: individuals are rated, and in some local pilot programs assigned an explicit "social credit" score, based on their actions, ranging from financial transactions and adherence to traffic laws to online behavior and even their social circles. A high rating can unlock privileges like easier access to loans or preferential treatment in education and employment, while a low one can result in restrictions, such as travel bans, exclusion from certain jobs, and public shaming. Facial recognition technology plays a crucial role in monitoring and enforcing this system, tracking citizens' movements and linking them to their social credit profiles.

Synapse Solutions: A Glimpse Behind the Curtain

My growing unease with the industry led me to sever ties with my consulting firm, but not before I came across something deeply troubling: an internal memo from a company called Synapse Solutions. Synapse, I learned through subsequent, discreet inquiries, was contracted to improve the facial recognition accuracy of the SCS, specifically in the Xinjiang region. The memo, dated October 2022, detailed a heated debate within the company regarding the difficulty of achieving equal accuracy across different ethnic groups, particularly the Uyghur population.

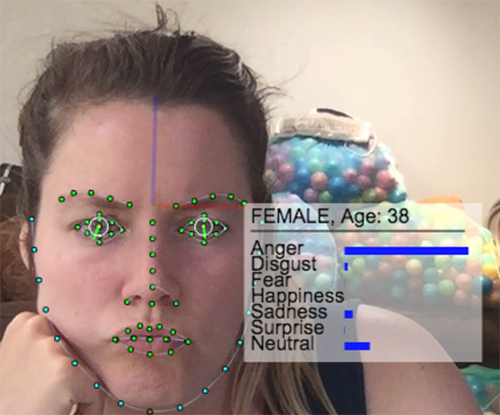

Image: Algorithmic bias visualized, with a glitching facial recognition box around a Uyghur face, highlighting misidentification.

The memo alluded to the inherent challenges of training algorithms on datasets that are not representative of all populations. It cited variations in facial features, lighting conditions in different regions, and even the quality of available images as contributing factors. However, the most disturbing part was the discussion of potential "workarounds." These weren't presented as solutions to improve overall accuracy, but rather as methods to ensure a higher rate of false positives for specific populations.

"Workarounds" and Weaponized Bias

What did these "workarounds" entail? The memo was deliberately vague, but the implications were clear. The workarounds it hinted at could include:

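- Lowering Confidence Thresholds: Decreasing the confidence level required to declare a match. Because the system already distinguishes Uyghur faces less reliably, a lower threshold would disproportionately increase the misidentification of Uyghurs as individuals already flagged by the system.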

- Bias Amplification: Intentionally skewing the training data to reinforce existing biases. For example, if the system already struggles to tell Uyghur faces apart, the training data could be manipulated so the model learns to exaggerate the features that separate Uyghur faces from everyone else's, rather than those that distinguish one Uyghur individual from another, leading to even more errors.

- Feature Weighting: Assigning greater weight to facial features that are common within the Uyghur population, so that different people who share those features are more likely to be scored as the same person, leading to misidentification based on superficial similarities.
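The first of these workarounds is easiest to see in numbers. A face matcher typically compares a similarity score against a threshold, and its false match rate is the share of different-person ("impostor") comparisons that clear that threshold. The minimal Python sketch below uses entirely synthetic score distributions; the group labels, means and spreads are hypothetical assumptions, not figures from the memo or from any deployed system. It shows why lowering one shared threshold does not hurt everyone equally: if one group's impostor scores already sit higher because the model separates those faces less well, that group's false match rate climbs fastest.

```python
import random


def false_match_rate(impostor_scores, threshold):
    """Fraction of different-person comparisons scored at or above the threshold."""
    if not impostor_scores:
        return 0.0
    return sum(s >= threshold for s in impostor_scores) / len(impostor_scores)


# Hypothetical assumption: the model separates group_B faces less well,
# so group_B's impostor (different-person) scores are higher on average.
rng = random.Random(0)
impostors = {
    "group_A": [rng.gauss(0.30, 0.10) for _ in range(10_000)],
    "group_B": [rng.gauss(0.45, 0.10) for _ in range(10_000)],
}

# Lower the shared threshold step by step and watch each group's false match rate.
for threshold in (0.70, 0.60, 0.50):
    rates = {g: false_match_rate(s, threshold) for g, s in impostors.items()}
    print(f"threshold={threshold:.2f} "
          + " ".join(f"{g}={r:.4f}" for g, r in rates.items()))
```

Applying a lower threshold to one group only would produce the same effect even more directly; either way, the disparity only becomes visible when error rates are measured per group.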

The memo also discussed the political pressure to deliver "results," even if those results were achieved through ethically questionable means. The chilling implication was that the goal wasn't simply to improve accuracy; it was to create a system that could be used to disproportionately target a specific ethnic group.

The Illusion of Objectivity: How Algorithmic Bias Reinforces Control

Even if these algorithmic flaws were initially unintentional – a product of biased training data or limitations in the technology – they can be easily exploited within the context of the Social Credit System. A higher rate of false positives for a specific population means that innocent individuals are more likely to be flagged, investigated, and penalized, even without any legitimate cause. This creates a climate of fear and self-censorship, discouraging dissent and reinforcing the government's control.
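The arithmetic behind that claim is simple: the expected number of wrongful flags grows linearly with both the number of scans and the per-scan false positive rate. The sketch below uses purely hypothetical inputs (one million scans a day, rates from 0.01% to 1%) and deliberately simplifies by treating every scan of an innocent person as one independent chance of a false positive; the function name is illustrative only.

```python
def expected_false_flags(daily_scans: int, false_positive_rate: float) -> float:
    """Expected wrongful flags per day, treating each scan as one independent
    chance of a false positive (a deliberate simplification)."""
    return daily_scans * false_positive_rate


# Hypothetical inputs for illustration only; not figures from any real deployment.
daily_scans = 1_000_000
for rate in (0.0001, 0.001, 0.01):
    flags = expected_false_flags(daily_scans, rate)
    print(f"false positive rate {rate:.2%}: about {flags:,.0f} people wrongly flagged per day")
```

Under these assumptions, moving a group from a 0.1% to a 1% false positive rate turns roughly a thousand wrongful flags a day into roughly ten thousand.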

Image: Diverse faces under the scrutiny of facial recognition, emphasizing the potential for widespread surveillance.

The problem, as O'Neil argues in "Weapons of Math Destruction," is that these algorithms are often presented as objective and neutral, masking the underlying biases that shape their outputs. When the Social Credit System flags someone based on a facial recognition error, it's easy to assume that the system is correct, that there must be a reason for the flag. This illusion of objectivity makes it difficult to challenge the system and hold it accountable.

Ethical Implications and the Urgent Need for Transparency

The development and deployment of technology that exhibits racial bias is a profound ethical failure. It's a failure that extends beyond the individual companies involved and implicates the entire AI community. We have a responsibility to ensure that our technologies are used to promote justice and equality, not to reinforce existing power structures and perpetuate discrimination.

Image: Symbolic representation of surveillance, with a single CCTV camera emphasizing the pervasive nature of monitoring.

What can be done?

- Demand Transparency: We need greater transparency in the development and deployment of facial recognition technology, including access to training data, algorithms, and performance metrics broken down by demographic group.

- Independent Audits: Independent audits are crucial to identify and address algorithmic biases. These audits should be conducted by experts who are independent of the companies developing and deploying the technology.

- Ethical Guidelines and Regulations: We need clear ethical guidelines and regulations to govern the use of facial recognition technology, including restrictions on its use for mass surveillance and ethnic profiling.

- Support Whistleblowers: We must protect and support whistleblowers who are willing to expose unethical practices within the tech industry.
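An independent audit of the kind described above usually starts by disaggregating error rates by demographic group, echoing the Gender Shades approach of reporting accuracy per subgroup rather than as one headline number. The Python sketch below is one minimal way to do that, assuming the auditor holds labeled comparison pairs: a demographic label, ground truth on whether both images show the same person, and the score the system produced. The `Comparison` record, the `audit` and `fmr_disparity` functions and the toy data are hypothetical illustrations, not any vendor's or regulator's actual tooling.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Comparison:
    group: str          # demographic label assigned by the auditor (hypothetical)
    same_person: bool   # ground truth: do both images show the same person?
    score: float        # similarity score reported by the system under audit


def audit(comparisons, threshold):
    """Return per-group false match rate (FMR) and false non-match rate (FNMR)."""
    counts = defaultdict(lambda: {"impostor": 0, "false_match": 0,
                                  "genuine": 0, "false_non_match": 0})
    for c in comparisons:
        tally = counts[c.group]
        matched = c.score >= threshold
        if c.same_person:
            tally["genuine"] += 1
            tally["false_non_match"] += int(not matched)
        else:
            tally["impostor"] += 1
            tally["false_match"] += int(matched)
    return {
        group: {
            "FMR": t["false_match"] / t["impostor"] if t["impostor"] else math.nan,
            "FNMR": t["false_non_match"] / t["genuine"] if t["genuine"] else math.nan,
        }
        for group, t in counts.items()
    }


def fmr_disparity(report):
    """Highest per-group FMR divided by the lowest (1.0 means parity)."""
    rates = [r["FMR"] for r in report.values() if not math.isnan(r["FMR"])]
    if not rates:
        return math.nan
    lowest, highest = min(rates), max(rates)
    if lowest == 0:
        return math.inf if highest > 0 else 1.0
    return highest / lowest


# Toy data, invented for illustration only.
data = [
    Comparison("A", False, 0.20), Comparison("A", False, 0.65),
    Comparison("A", True, 0.90), Comparison("A", True, 0.55),
    Comparison("B", False, 0.70), Comparison("B", False, 0.75),
    Comparison("B", True, 0.95), Comparison("B", True, 0.85),
]
report = audit(data, threshold=0.60)
print(report)
print("FMR disparity:", fmr_disparity(report))
```

Reporting FMR and FNMR side by side matters: a threshold change that lowers one raises the other, so a single overall accuracy figure can hide exactly the kind of group-specific disparity described in this article.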

The Future of Freedom in a Surveilled World

The Chinese Social Credit System serves as a stark warning about the potential dangers of unchecked technological surveillance. While the system is currently limited to China, the technologies and techniques being used are rapidly spreading around the world. We must act now to ensure that these technologies are used responsibly and ethically, before they are used to erode our freedoms and create a world where every aspect of our lives is monitored and controlled.

Image: QR codes forming a dystopian cityscape, representing the pervasive digital control and surveillance in the Social Credit System.

The memo from Synapse Solutions is just one piece of the puzzle, but it's a piece that reveals a disturbing truth: even well-intentioned efforts to improve accuracy can lead to dangerous outcomes when applied within a system designed for social control. We must remember that technology is not neutral. It reflects the values and biases of its creators, and it can be used to either empower or oppress. The choice is ours.

Image: Graphical representation of facial recognition accuracy disparities, highlighting lower accuracy for Uyghur individuals.

Image: Diverse faces looking directly at the viewer, symbolizing the universality of the surveillance issue.

Image: Partially obscured face being scanned, representing the loss of privacy in a surveilled society.

Image: A sky filled with CCTV cameras, symbolizing the oppressive feeling of constant surveillance and lack of privacy.