The Authenticity Illusion: Exposing the Social Influence Score and the Dead Internet Theory

Are we truly connecting with real people online, or are we increasingly interacting with sophisticated AI and bot networks that create an illusion of genuine human engagement? The "Dead Internet Theory," a fringe conspiracy theory, posits that the internet is largely populated by non-human entities. While the theory itself is speculative, it raises legitimate concerns about the proliferation of bots, AI-generated content, and the power of algorithms to shape our online experiences. Today, we delve into the shadowy world of "social influence scores" and "authenticity indexes," revealing how these systems allegedly function and how they might be used to subtly manipulate online discourse.

A glimpse into a server farm, the unseen engine behind the algorithms that shape our online reality, potentially housing the "Authenticity Index" we'll be discussing.

I'm Alex, a former cybersecurity consultant. I used to work for StreamSphere, a major social media platform you've undoubtedly heard of. I was part of the team developing their "Authenticity Index," an AI system designed to identify and flag inauthentic accounts. Initially, I believed in the mission: to combat bots and spam. However, I became increasingly disillusioned as I witnessed how the system was deployed, often against legitimate users and dissenting voices. I'm speaking anonymously to ConspiracyTheorize.com to shed light on this issue, because the potential implications are far-reaching.

The Leaked Database: A Glimpse Behind the Curtain

A purported leak of StreamSphere's database schema and sample data has provided a disturbing window into the inner workings of this "authenticity" assessment system. The leak describes a complex AI that analyzes a multitude of factors to assign each user a score reflecting their perceived genuineness. This "social influence score" ostensibly dictates a user's visibility and reach on the platform. While I cannot independently verify the full authenticity of the leaked data, the schema aligns closely with the system I helped build.

So, how does this AI purportedly work? Let's break down the key components.

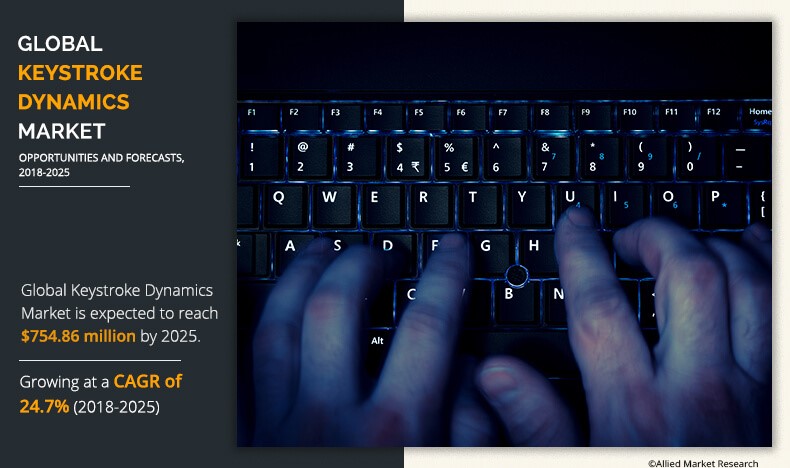

1. Keystroke Dynamics: The Rhythm of Reality

Keystroke dynamics seeks to distinguish human typing patterns from the consistent, often robotic input of bots.

Keystroke dynamics analyzes the subtle variations in your typing. It's not just what you type, but how you type it. Humans exhibit unique rhythms, pauses, and pressure variations. Bots, on the other hand, tend to have more consistent, almost mechanical input patterns. The AI uses machine learning algorithms to identify these subtle differences, flagging accounts with abnormally consistent keystroke patterns as potentially non-human.
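
To make the idea concrete, here is a minimal sketch in Python of the simplest possible version of this check. It is not StreamSphere's code, and a real system would reportedly use trained models rather than a single statistic. The function names, the sample timestamps, and the 0.15 cutoff are all assumptions chosen for illustration. The sketch measures how much the gaps between key presses vary (the coefficient of variation) and flags input whose rhythm is suspiciously even.

```python
from statistics import mean, stdev


def interval_variability(key_times_ms):
    """Coefficient of variation of the gaps between consecutive key presses."""
    gaps = [later - earlier for earlier, later in zip(key_times_ms, key_times_ms[1:])]
    if len(gaps) < 2:
        raise ValueError("need at least three key presses")
    average_gap = mean(gaps)
    if average_gap <= 0:
        raise ValueError("timestamps must be increasing")
    return stdev(gaps) / average_gap


def looks_mechanical(key_times_ms, min_cv=0.15):
    """Flag input whose timing is too regular. min_cv is an illustrative guess."""
    return interval_variability(key_times_ms) < min_cv


# Hypothetical key-press timestamps in milliseconds.
human_typing = [0, 140, 310, 395, 720, 810, 1180]   # uneven pauses
scripted_typing = [0, 100, 201, 300, 400, 501, 600]  # near-constant gaps

print(looks_mechanical(human_typing))
print(looks_mechanical(scripted_typing))
```

Note how crude this is: a fast touch typist with a steady rhythm, or anyone pasting text through an accessibility tool, could fall under the cutoff just as easily as a bot.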

2. Linguistic Analysis: Deconstructing Your Digital Voice

Linguistic analysis scrutinizes vocabulary, syntax, and style to detect AI-generated or plagiarized content.

This is where things get dicey. Linguistic analysis delves into the nuances of your writing style, vocabulary, syntax, and even your preferred topics. The AI attempts to identify patterns that are characteristic of AI-generated text or reused content. For example, repetitive phrasing, unusually formal language for social media, and deviations from the style expected for a user's demographic profile are all treated as red flags.

The problem? This can easily be weaponized. I saw the system disproportionately flagging accounts critical of StreamSphere's data privacy policies as "inauthentic" based on "stylometric analysis" of their posts. Users who employed precise, technical language to articulate their concerns were penalized for sounding "too formal" or "AI-generated." This effectively silenced informed criticism under the guise of combating bots.
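
A rough Python sketch shows how little it takes for this to go wrong. This is an illustration under stated assumptions, not the leaked system: the feature names, both thresholds, and the two sample posts are invented for the example. It computes a few basic stylometric features and applies the two red flags described above, formality (approximated here by average word length) and repetitive phrasing (repeated three-word sequences).

```python
import re
from collections import Counter


def style_features(text):
    """Compute a few simple stylometric features for a post."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        raise ValueError("no words found")
    trigrams = Counter(zip(words, words[1:], words[2:]))
    total_trigrams = sum(trigrams.values())
    repeated = sum(count for count in trigrams.values() if count > 1)
    return {
        "avg_word_len": sum(len(w) for w in words) / len(words),
        "type_token_ratio": len(set(words)) / len(words),
        "repeated_trigram_share": repeated / total_trigrams if total_trigrams else 0.0,
    }


def flag_reasons(text, max_avg_word_len=6.0, max_repeat_share=0.2):
    """Return the red flags a naive scorer would raise. Thresholds are made up."""
    features = style_features(text)
    reasons = []
    if features["avg_word_len"] > max_avg_word_len:
        reasons.append("unusually formal vocabulary")
    if features["repeated_trigram_share"] > max_repeat_share:
        reasons.append("repetitive phrasing")
    return reasons


# Two hypothetical posts about the same grievance.
casual_post = "lol this update is so bad, who asked for this"
technical_post = (
    "Retention policies permit indefinite storage of behavioural "
    "identifiers without meaningful consent"
)

print(flag_reasons(casual_post))
print(flag_reasons(technical_post))
```

The casual post passes cleanly, while the precise, technical complaint is flagged for its vocabulary alone. Nothing in the features measures whether a human wrote the text, only whether it sounds like what the scorer expects.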

3. Network Analysis: Mapping the Social Web

Network analysis maps social connections, identifying suspicious clusters and engagement patterns characteristic of bot activity.

Network analysis maps your social connections and identifies clusters of accounts with high reciprocity (mutual following and engagement) or anomalous linking patterns indicative of bot networks. If you interact mostly with accounts that have low "authenticity scores," your own score will suffer, regardless of your actual behavior.

This created a chilling effect. Accounts sharing alternative news sources were penalized due to "anomalous network connectivity" with known bot networks, even though the actual users operating them were demonstrably human. Because these alternative sources were often targeted by coordinated disinformation campaigns, their followers were automatically flagged as potentially inauthentic. This resulted in a significant reduction in the visibility of these news sources and their ability to reach a wider audience.
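
The guilt-by-association effect is easy to reproduce in a few lines of Python. The sketch below is a simplified stand-in, not the real scoring logic: the account names, the scores, and the 50/50 blending weight are all assumptions made for the example. It computes reciprocity for one account and then blends that account's own score with the average score of the accounts it interacts with.

```python
def reciprocity(follows, user):
    """Share of the accounts `user` follows that follow `user` back."""
    following = follows.get(user, set())
    if not following:
        return 0.0
    mutual = sum(1 for other in following if user in follows.get(other, set()))
    return mutual / len(following)


def network_adjusted_score(own_score, neighbour_scores, neighbour_weight=0.5):
    """Blend an account's score with its neighbours' average score.

    neighbour_weight is an illustrative guess at how strongly the
    neighbourhood counts against (or for) the account.
    """
    if not neighbour_scores:
        return own_score
    neighbour_average = sum(neighbour_scores) / len(neighbour_scores)
    return (1 - neighbour_weight) * own_score + neighbour_weight * neighbour_average


# Hypothetical follow graph: "ana" is a real person whose followers
# include two bots that latched onto the news source she reads.
follows = {
    "ana": {"bot1", "bot2", "altnews"},
    "bot1": {"ana"},
    "bot2": {"ana"},
    "altnews": set(),
}
scores = {"ana": 0.9, "bot1": 0.1, "bot2": 0.1, "altnews": 0.4}

neighbours = [scores[name] for name in sorted(follows["ana"])]
print(reciprocity(follows, "ana"))
print(network_adjusted_score(scores["ana"], neighbours))
```

In this toy graph, an account that scores 0.9 on its own behaviour drops to roughly 0.55 purely because of who surrounds it. The user did nothing differently; the bots chose her, not the other way around.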

4. Biometric Data (Allegedly): The Most Disturbing Element

The most controversial aspect involves alleged correlation of online activity with anonymized biometric data, raising serious privacy concerns.

This is the aspect that truly horrified me. The leak suggests the system attempts to correlate your online activity with anonymized biometric data – facial recognition data from profile pictures, aggregated location data, and device sensor data – to verify consistency and rule out profile hijacking by botnets.

While StreamSphere always maintained that biometric data was anonymized and used solely for security purposes, the potential for abuse is immense. Imagine, for example, if your political views, inferred from your online activity, were used to "prove" inconsistencies in your biometric data, leading to a false "inauthentic" flag. The possibilities are terrifying.

The Consequences of a Low Authenticity Score: Digital Marginalization

Users with low authenticity scores face reduced visibility, shadowbanning, and limited access to platform features.

A low "authenticity score" on StreamSphere, and likely other platforms using similar systems, can have a significant impact on your online experience:

- Reduced Visibility: Your posts are less likely to appear in other users' feeds.

- Shadowbanning: Your posts are visible only to you, effectively silencing you without your knowledge.

- Increased Scrutiny: Your content is subject to more rigorous review by content moderators.

- Limited Access: You may be restricted from using certain platform features, such as live streaming or monetization.

- Account Suspension: In extreme cases, your account may be suspended or permanently banned.

This creates a form of digital marginalization, where dissenting voices and alternative perspectives are systematically suppressed. The implications for free speech and open discourse are profound.

Real-World Concerns and Ethical Implications

The prevalence of bots and AI-generated content is a genuine concern. As of 2023, industry reports estimated that bots accounted for a significant portion of all internet traffic, with some putting the figure at 40% or higher. The need to combat misinformation and malicious automation is undeniable. However, the implementation of algorithmic scoring systems like the Authenticity Index raises serious ethical questions.

These systems are inherently prone to bias. Algorithmic bias, stemming from biased training data or flawed algorithms, can lead to the disproportionate flagging of certain groups or viewpoints as "inauthentic." This creates a form of algorithmic censorship, where legitimate users are penalized for expressing unpopular or controversial opinions.
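
One way an outside auditor could test for this kind of bias, if platforms ever released the data, is to compare false positive rates across groups: of the accounts known to be human, what share in each group was flagged anyway? The Python sketch below is hypothetical throughout. The group labels and the audit records are invented, and no such dataset has been published.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per-group share of genuine human accounts that were flagged.

    records: iterable of (group, is_actually_bot, was_flagged) tuples.
    """
    humans = defaultdict(int)
    wrongly_flagged = defaultdict(int)
    for group, is_bot, flagged in records:
        if is_bot:
            continue  # correctly or incorrectly scored bots are not false positives
        humans[group] += 1
        if flagged:
            wrongly_flagged[group] += 1
    return {group: wrongly_flagged[group] / humans[group] for group in humans}


# Invented audit sample: writing style stands in for any grouping of interest.
audit_sample = [
    ("casual", False, False),
    ("casual", False, False),
    ("casual", False, True),
    ("casual", False, False),
    ("casual", True, True),
    ("technical", False, True),
    ("technical", False, True),
    ("technical", False, False),
    ("technical", False, True),
    ("technical", True, True),
]

for group, rate in sorted(false_positive_rates(audit_sample).items()):
    print(f"{group}: {rate:.0%} of human accounts wrongly flagged")
```

A large gap between groups, like the one in this made-up sample, would be a strong sign that the scorer is penalizing a style of expression rather than detecting automation.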

Algorithmic bias can lead to unfair or discriminatory outcomes in authenticity assessments.

Furthermore, these systems often operate in a black box, lacking transparency and accountability. Users are given little or no insight into how their "authenticity score" is calculated or how to appeal a negative assessment. This lack of transparency breeds distrust and creates a chilling effect on free expression.

The use of biometric data, even anonymized, raises significant privacy concerns. The potential for re-identification and misuse is ever-present. The aggregation of sensitive data, combined with sophisticated AI algorithms, creates a powerful surveillance apparatus that can be used to monitor and control online behavior.

Is the Dead Internet Theory Real? A Question of Degree

The lines between human and AI-generated content are blurring, raising questions about the authenticity of online interactions.

While the Dead Internet Theory may be an exaggeration, it highlights legitimate concerns about the increasing prevalence of bots and AI-generated content online. The rise of sophisticated AI models like GPT-3 and its successors makes it increasingly difficult to distinguish between genuine human expression and artificial imitation.

The implementation of "authenticity indexes" and "social influence scores" further complicates the issue. While these systems are ostensibly designed to combat bots and spam, they can be easily weaponized to silence dissenting voices and manipulate online discourse.

Concerns about the power of tech companies to manipulate online narratives and suppress dissenting voices are growing.

Are we truly alone online, surrounded by a sea of bots and AI-generated content? Perhaps not entirely. But the increasing sophistication of AI, combined with the opaque and potentially biased nature of algorithmic scoring systems, raises serious questions about the authenticity of our online experiences. Are we connecting with real people, or are we simply interacting with sophisticated simulations designed to shape our thoughts and behaviors?

The question remains: are we truly connecting with real people online, or are we interacting with increasingly sophisticated simulations?

The answer, I fear, may be more complex and disturbing than we realize. It's time to demand greater transparency and accountability from tech companies and to critically examine the ethical implications of AI-driven content moderation. The future of free speech and open discourse depends on it.